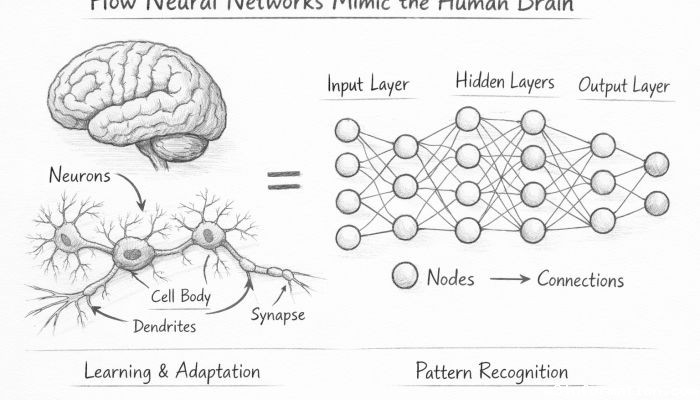

How Neural Networks Mimic the Human Brain

The human brain has been a source of wonder for centuries, an enigma to scientists and engineers. Its ability to process complex information, adapt to novel experiences, and learn is unparalleled. To create machines that could potentially match the brain in learning and reasoning, the concept of artificial neural networks (ANNs) was developed, now a significant area within artificial intelligence (AI). Neural networks are designed to emulate the learning capabilities and decision-making process of the human brain, allowing computers to solve complex problems and make predictions. In this article, we will examine in detail how neural networks take inspiration from the human brain, delving into their structure, functionality, and learning processes to create increasingly intelligent systems.

- Understanding the Biological Neuron

- The Artificial Neuron: A Simplified Model

- Network Architecture: Layers of Neurons

- Synaptic Weights and Learning

- Activation Functions: Non-Linearity in Neurons

- Feedforward Propagation: Signal Flow in the Network

- Backpropagation and Error Correction

- Parallel Processing in Neural Networks

- Pattern Recognition and Generalization

- Limitations Compared to Biological Networks

- Beyond the Basics: Spiking Neural Networks and Neuromorphic Computing

- Ethical and Philosophical Implications

- Conclusion


Understanding the Biological Neuron

To appreciate how neural networks mimic the brain, one must first understand the neuron, the brain’s basic unit. A biological neuron consists of a cell body (soma), dendrites, and an axon. Dendrites receive signals from other neurons at junctions called synapses. These signals are transmitted to the soma, where they are combined. If the combined signal strength exceeds a certain threshold, the neuron will fire, sending an electrical impulse down its axon to activate connected neurons. The entire process allows the brain to process and transmit information, resulting in cognition, memory, and learning.

The Artificial Neuron: A Simplified Model

Artificial neurons are simplified mathematical models of their biological counterparts; the perceptron is one of the earliest and best-known examples. An artificial neuron receives inputs, either raw data or the outputs of other neurons, which play the role of the signals arriving at a biological neuron’s dendrites. Each input has an associated weight that scales its value. The neuron sums the weighted inputs and applies an activation function to this sum to determine its output. The artificial neuron has no physical soma or axon; it processes information purely through mathematical operations. The activation function is key: it introduces non-linearity, allowing the network to learn complex patterns and produce graded rather than purely binary outputs, loosely analogous to the firing mechanism of a biological neuron.
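The weighted-sum-then-activation step described above can be sketched in a few lines of Python. This is only an illustration: the input values, weights, the bias term, and the choice of a sigmoid activation are assumptions made for the example, not details given in this article.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Sum the weighted inputs, add a bias, and apply the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1  # assumed extra term; many models include one

output = neuron_output(inputs, weights, bias)
print(round(output, 4))
```

Here the weighted sum is -0.4, and the sigmoid maps it to a value a little below 0.5, so the neuron responds weakly rather than simply switching on or off.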

Network Architecture: Layers of Neurons

Neural networks consist of layers of neurons interconnected to one another, similar to the complex network of neurons in the human brain. There is an input layer, one or more hidden layers, and an output layer. The input layer receives the initial data, which then flows through the hidden layers, each composed of artificial neurons that process the information further before reaching the output layer that produces the final result. This structure enables the network to capture and model complex relationships within data, similar to how different areas of the brain process information in a hierarchical manner.

Synaptic Weights and Learning

In the brain, synapses can strengthen or weaken, a phenomenon that is central to learning and memory. Neural networks simulate this with adjustable weights. During training, these weights are tuned to reduce the difference between the network’s prediction and the target output, allowing the network to learn from the data. The gradients that indicate how each weight should change are typically computed with backpropagation, and an optimizer such as gradient descent then uses those gradients to adjust the weights and improve the network’s performance.
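As a rough sketch of what tuning the weights means in practice, the following Python example repeatedly nudges the weights of a single linear neuron to shrink its squared error on one training example. The squared-error loss, the learning rate of 0.1, and the data values are all assumptions chosen for illustration.

```python
def train_step(weights, inputs, target, learning_rate):
    """One gradient-descent update for a single linear neuron."""
    prediction = sum(w * x for w, x in zip(weights, inputs))
    error = prediction - target
    # For loss = 0.5 * error**2, the gradient w.r.t. weight i is error * x_i.
    new_weights = [w - learning_rate * error * x
                   for w, x in zip(weights, inputs)]
    return new_weights, 0.5 * error ** 2

# Hypothetical training example.
inputs, target = [1.0, 2.0], 1.0
weights = [0.0, 0.0]
losses = []
for _ in range(20):
    weights, loss = train_step(weights, inputs, target, learning_rate=0.1)
    losses.append(loss)

print(losses[0], losses[-1])
```

Each update moves the weights a small step against the gradient, so the recorded loss falls from one iteration to the next, which is the numerical counterpart of a connection being strengthened or weakened.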

Activation Functions: Non-Linearity in Neurons

Activation functions in artificial neurons play a role similar to the firing threshold in biological neurons. They help introduce non-linearity into the network, enabling it to handle more complex tasks than what a linear model could achieve. Common activation functions include sigmoid, tanh, and ReLU (Rectified Linear Unit). These functions take the weighted sum of the inputs and transform it into the neuron’s output, which is then passed on to the next layer, analogous to how biological neurons activate and process signals.
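The three activation functions named above are short enough to write out directly. The sketch below uses only Python's standard `math` module; the sample inputs are arbitrary.

```python
import math

def sigmoid(z):
    """Maps any real number to the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Maps any real number to the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive values through and clips negatives to zero."""
    return max(0.0, z)

# Compare how each function transforms the same weighted sums.
for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), round(tanh(z), 3), relu(z))
```

Sigmoid and tanh saturate for large positive or negative inputs, while ReLU is zero for negative inputs and grows linearly for positive ones, which is the behavior closest to a simple firing threshold.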

Feedforward Propagation: Signal Flow in the Network

Signal flow in neural networks is somewhat analogous to how sensory information travels through the brain. In a process called feedforward propagation, data enters the network through the input layer and is then passed on to subsequent hidden layers. Each neuron in these layers processes the incoming data and passes the result to the next layer, continuing until the final output layer generates the prediction or decision. This unidirectional flow of information through the network layers allows the system to transform raw input into a meaningful output.
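The layer-by-layer flow described above can be illustrated with a minimal fully connected network in plain Python. The layer sizes, weights, biases, and the use of a sigmoid in every layer are hypothetical choices for this sketch.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Compute one layer; `weights` holds one row of input weights per neuron."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feedforward(inputs, layers):
    """Pass the data through each layer in order and return the final output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [0.0, 0.1, -0.1]),
    ([[0.3, -0.6, 0.9]], [0.05]),
]
prediction = feedforward([1.0, 0.5], layers)
print(prediction)
```

The output of each layer becomes the input of the next, and nothing flows backward during this pass, which is what makes the propagation unidirectional.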

Backpropagation and Error Correction

Backpropagation is a key learning algorithm in neural networks and is loosely comparable to the way the brain learns from experience. It is used to adjust the weights of the network in response to the error of its output. The algorithm applies the chain rule to calculate the gradient of the loss function with respect to each weight, working backward from the output layer. An optimizer, most commonly a form of gradient descent, then uses these gradients to update the weights. Repeating this process iteratively reduces the error and fine-tunes the network’s performance, akin to how the brain adjusts its synaptic strengths when learning from mistakes or feedback.
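To make the chain rule concrete, here is a deliberately tiny example: one input, one sigmoid hidden neuron, and one linear output neuron. The network shape, the squared-error loss, the learning rate, and the starting values are all assumptions chosen to keep the arithmetic visible.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w1, w2):
    h = sigmoid(w1 * x)  # hidden activation
    y = w2 * h           # linear output
    return h, y

def loss(x, target, w1, w2):
    _, y = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2

def backward(x, target, w1, w2):
    """Apply the chain rule from the loss back to each weight."""
    h, y = forward(x, w1, w2)
    dL_dy = y - target            # derivative of the loss w.r.t. the output
    dL_dw2 = dL_dy * h            # output weight: y = w2 * h
    dL_dh = dL_dy * w2            # error passed back to the hidden neuron
    dh_dz = h * (1.0 - h)         # derivative of the sigmoid
    dL_dw1 = dL_dh * dh_dz * x    # hidden weight: z = w1 * x
    return dL_dw1, dL_dw2

# Hypothetical example and starting weights.
x, target = 1.5, 0.8
w1, w2 = 0.5, -0.3
initial_loss = loss(x, target, w1, w2)
for _ in range(200):
    g1, g2 = backward(x, target, w1, w2)
    w1 -= 0.5 * g1  # gradient-descent update with learning rate 0.5
    w2 -= 0.5 * g2
final_loss = loss(x, target, w1, w2)
print(initial_loss, final_loss)
```

Backpropagation is the `backward` function, which only computes gradients; the two subtraction lines in the loop are the gradient-descent step that actually changes the weights.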

Parallel Processing in Neural Networks

The brain’s efficiency in processing complex tasks is partly due to its ability to carry out vast numbers of operations simultaneously. Neural networks are also parallel by design: the neurons within a layer do not depend on one another, so their outputs can be computed concurrently during both training and inference. This allows for efficient handling of large datasets and contributes to the speed of tasks like image recognition or natural language processing. The parallelism in neural networks is a scaled-down version of the brain’s, made practical today by hardware such as GPUs and TPUs, yet the principle of concurrent processing is a shared trait between the two.

Pattern Recognition and Generalization

One of the human brain’s most remarkable features is its capacity for pattern recognition. Neural networks exhibit a similar capability. When trained on a dataset, they learn to identify patterns and generalize from specific examples to make predictions on new, unseen data. This process of learning and generalization is at the heart of many applications of neural networks, from facial recognition in images to understanding language in text or speech.

Limitations Compared to Biological Networks

Despite many similarities, artificial neural networks are still far simpler than the human brain. Neurons in the brain are more complex and varied, and the learning and plasticity that occur in the brain involve a more extensive range of mechanisms and timescales, including biochemical changes in the synapses and even structural changes in the brain. In contrast, artificial neural networks usually run as software on digital hardware, and training or running large models can consume far more power than the brain does. These limitations are important to acknowledge as researchers strive to develop more advanced, brain-like models.

Beyond the Basics: Spiking Neural Networks and Neuromorphic Computing

Advances in neural network research have led to the development of models that more closely resemble brain function, such as spiking neural networks (SNNs). SNNs simulate the actual timing of neuron spikes, providing a richer and more dynamic model of neural activity. Neuromorphic computing platforms are being developed to implement these networks on hardware that mimics the brain’s structure and efficiency. These technologies hold promise for more brain-like and more energy-efficient processing, with potential applications across AI.
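One common simplified spiking model is the leaky integrate-and-fire neuron, sketched below in Python. The article does not name a specific model, so the choice of this one, along with the threshold, leak factor, reset value, and input current, is an assumption made for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    potential = reset
    spike_times = []
    for t, current in enumerate(input_current):
        # The membrane potential decays each step, then integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            spike_times.append(t)  # the neuron fires
            potential = reset      # and its potential is reset
    return spike_times

# Hypothetical constant input of 0.3 per time step for 20 steps.
spikes = simulate_lif([0.3] * 20)
print(spikes)  # [3, 7, 11, 15, 19]
```

Unlike the artificial neurons described earlier, the output here is a sequence of spike times rather than a single number, so information is carried by when the neuron fires as well as how often.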

Ethical and Philosophical Implications

The increasing resemblance of neural networks to the human brain also raises a range of ethical and philosophical questions. As machines become more capable of tasks that were once thought to require human-like cognition, such as recognizing faces, understanding language, or even driving cars, we must consider the implications of creating systems that can process information in ways that are similar to living beings. Issues of consciousness, identity, and ethical use of such technology become more pressing as the line between biological and artificial processing blurs.

Conclusion

Neural networks have been designed to approximate the function of the human brain, from their basic neuron models and learning rules to their hierarchical structure and capacity for pattern recognition. Through similarities such as the layering of neurons, the use of weighted connections, and the employment of activation functions, neural networks capture the essence of how the brain learns from experience and makes decisions. Although significant advances have been made in making neural networks more sophisticated and brain-like, such as the development of spiking neural networks and neuromorphic computing, the complexity and power efficiency of the human brain continue to outpace artificial systems. The journey to create machines that can learn and reason like humans is not only a technological endeavor but also a philosophical exploration of the nature of intelligence and consciousness itself.

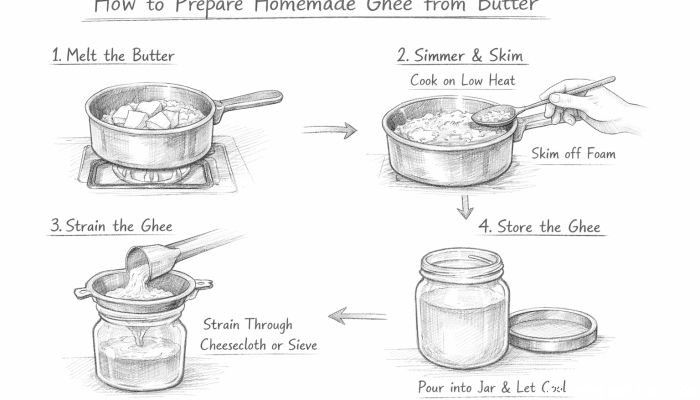

How to prepare homemade ghee from butter

How to prepare homemade ghee from butter

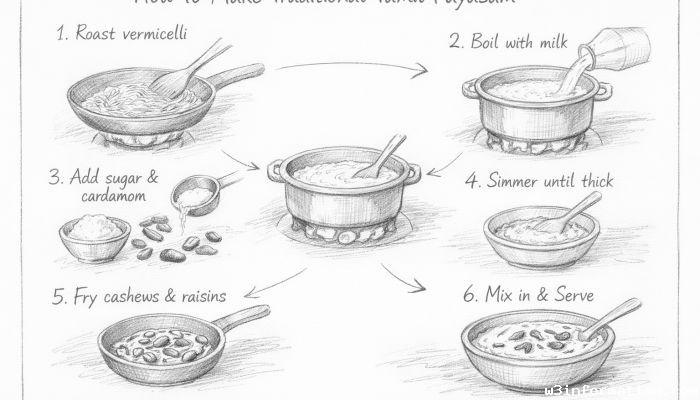

How to make traditional Tamil payasam

How to make traditional Tamil payasam

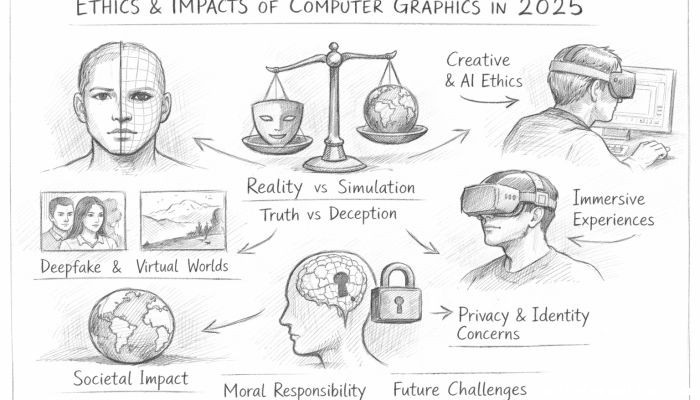

Ethics and Impacts of Computer Graphics in 2025

Ethics and Impacts of Computer Graphics in 2025

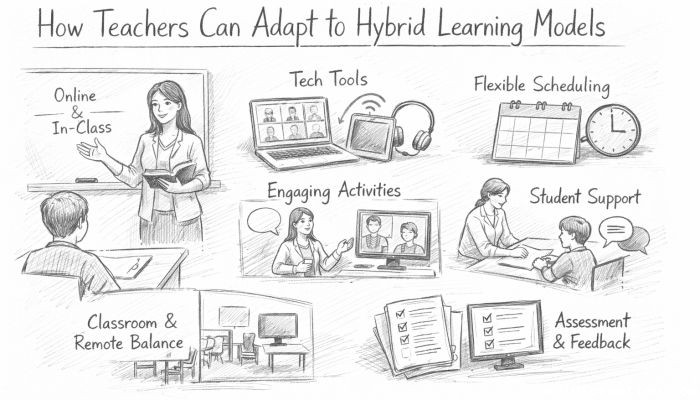

How Teachers Can Adapt to Hybrid Learning Models

How Teachers Can Adapt to Hybrid Learning Models

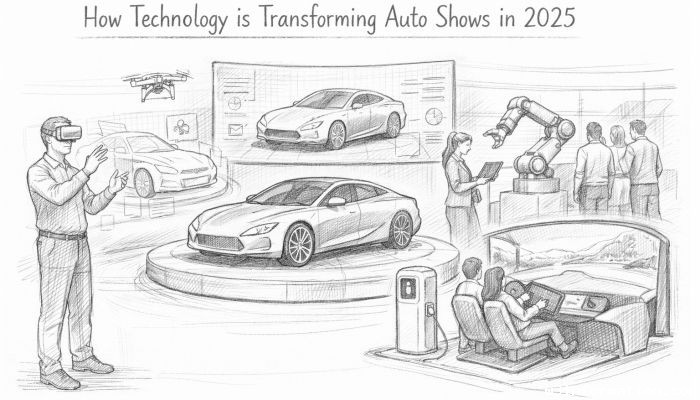

How Technology is Transforming Auto Shows in 2025

How Technology is Transforming Auto Shows in 2025